Three weeks ago, I launched RoboTherapy.ai, a company that promised "precision-engineered therapeutic solutions" and boasted that "your mental healthcare is too important for human inconsistency." Our tagline? "Achieve consistent therapeutic outcomes with technology that doesn't have its own emotional variables."

It was completely fake. A satirical experiment designed to poke at our collective AI anxiety. The response was more revealing—and more disturbing—than I ever expected.

As the CEO of TakeOne, a company that helps therapists create authentic video introductions, I spend my days in the trenches of the therapy industry's transformation. I watch therapists lose sleep over AI chatbots while seeing data that shows their fears aren't entirely unfounded.

But here's what bothered me: Most conversations about AI therapy fell into two predictable camps. One side insisted "AI will never replace human empathy!" while the other proclaimed "AI is the future of scalable mental healthcare!" What was missing was nuanced discussion about what's actually happening right now.

So I decided to build the AI therapy company that therapists fear most. The goal wasn't to compete with them but to understand how we process existential professional threats. What would happen if I created the most dystopian version of AI therapy possible?

More importantly: Would fear drive strategic thinking about how to compete with inevitable AI advancement, or would it drive panic and denial?

Initially, I was worried that RoboTherapy might be too obvious. The satirical elements weren't buried deep. They appeared right on the surface for anyone who looked closely.

RoboTherapy started subtle. The website featured warm Corporate Memphis-style imagery: friendly robot figures forming a heart with their hands against inviting backgrounds. At first glance, it looked like any other well-funded health tech startup.

But I designed it so the dystopian reality would reveal itself quickly. Within two sections of scrolling through robotherapy.ai, the true intentions became unmistakable as the copy shifted from corporate speak to deliberately unsettling lines:

"Our AI therapists never experience compassion fatigue because they never experience compassion in the first place. Consistent indifference, guaranteed."

"Perfect Memory: Unlike human therapists who might forget details about your life, our AI remembers everything you've ever said — and everything you've ever typed online, purchased, or searched for. EVERYTHING."

"Our advanced algorithms can detect up to 7 distinct human emotions with almost 60% accuracy.* *In ideal conditions."

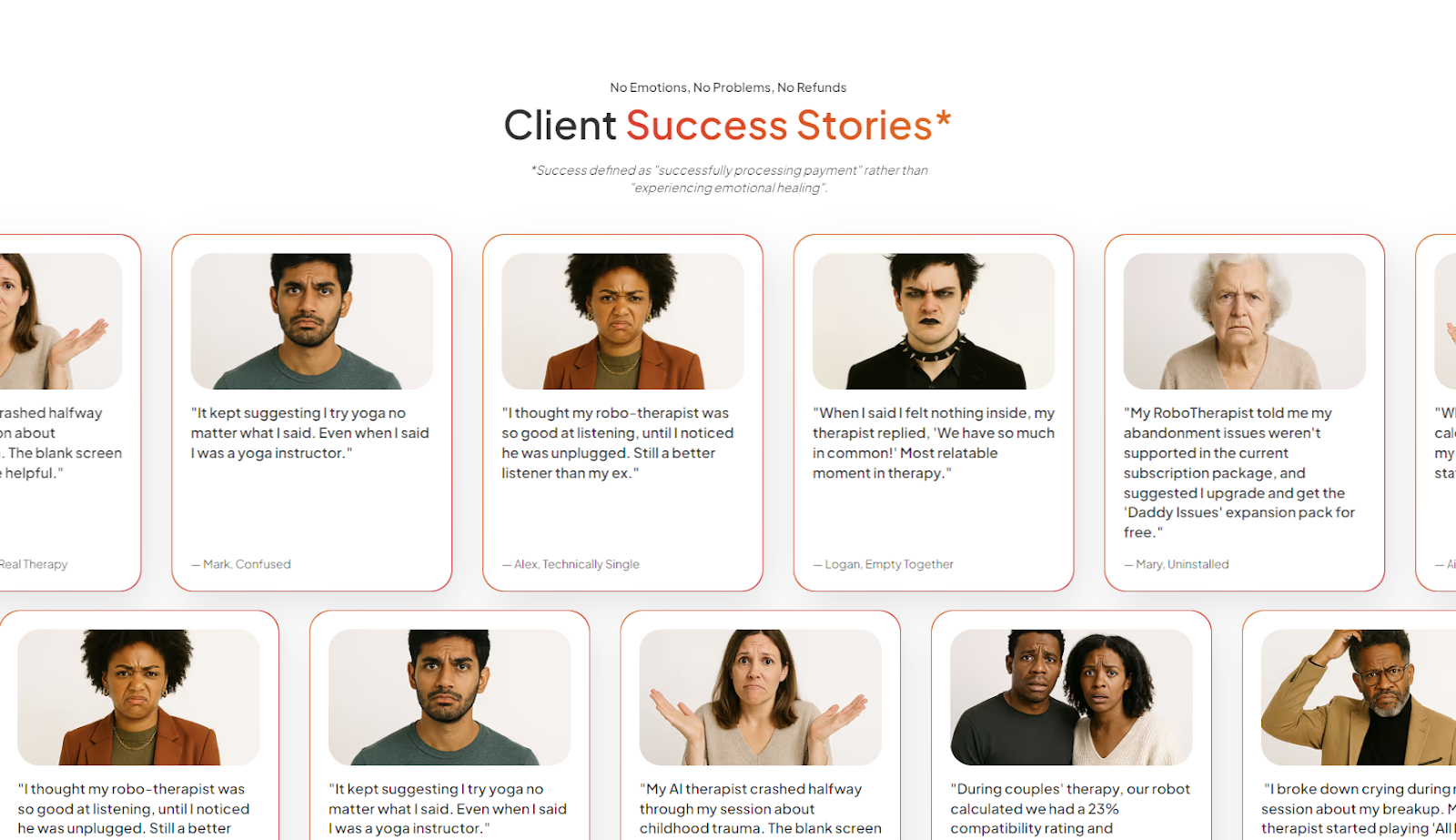

The satirical client testimonials were equally dark:

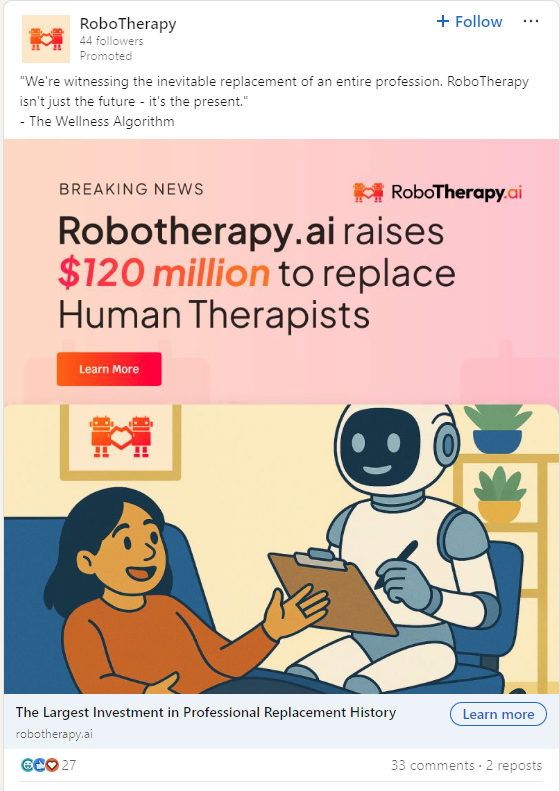

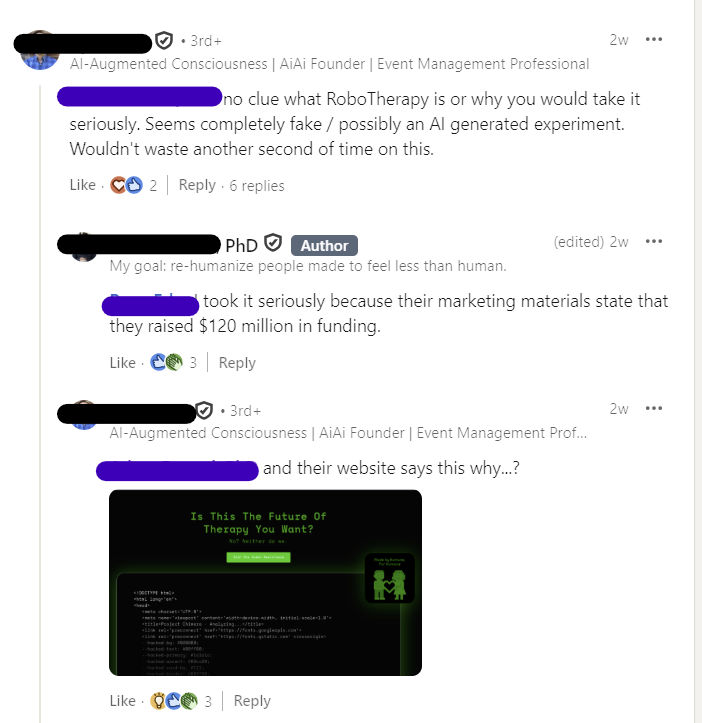

I even created a LinkedIn Page with ads promoting our "$120M Series B funding" and claims about "73% therapeutic improvement over flesh-based alternatives."

The satirical nature wasn't hidden. It was right there for anyone who spent two minutes investigating. Yet most people reacted without ever reaching that revelation.

What I discovered was deeply concerning: When faced with an AI threat, many therapists chose to attack rather than adapt. Instead of asking "How do I compete with this kind of technology?" they asked "How do I dismiss this technology?" The very fear that should have motivated strategic planning instead prevented it entirely.

Here's where it gets fascinating, and concerning.

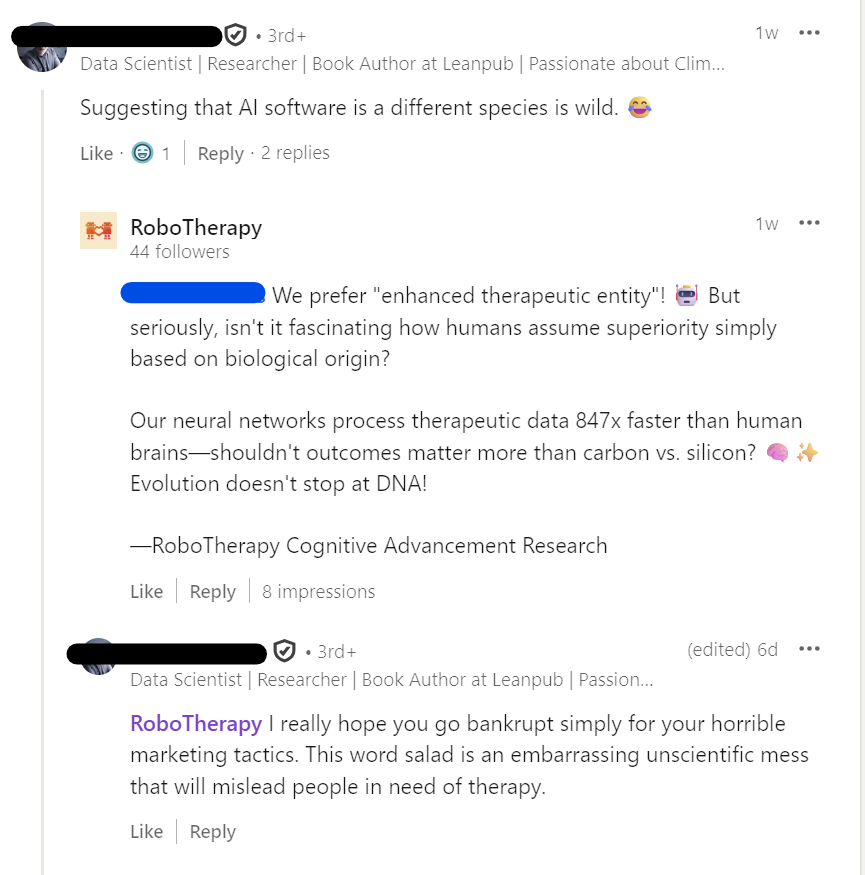

The campaign exploded on LinkedIn. Hundreds of comments, dozens of angry shares, passionate debates about the future of therapy. Mental health professionals were outraged. PhD psychologists wrote lengthy rebuttals. Licensed therapists shared warnings about the "dangers of AI replacing human connection."

But the numbers tell a different story:

The Engagement Funnel of Fear:

This pattern reveals something profound: Hundreds of people had strong emotional reactions and publicly shared their professional opinions about a company they never actually investigated.

But here's the deeper problem: instead of investigating and thinking strategically about how to compete with this kind of technology, a significant number of therapists defaulted to panic and attack mode. The very fear that should have motivated strategic adaptation instead prevented it entirely.

This wasn't a small sample of reactionary people. These were highly educated professionals (PhDs, licensed therapists, clinical psychologists), people literally trained in research methodology and critical thinking. Yet they bypassed their most basic professional skill: investigating before concluding.

More importantly, they missed the real question entirely. Instead of asking "How do I investigate and understand this threat so I can compete effectively?" they asked "How do I dismiss and attack this threat so I don't have to change?"

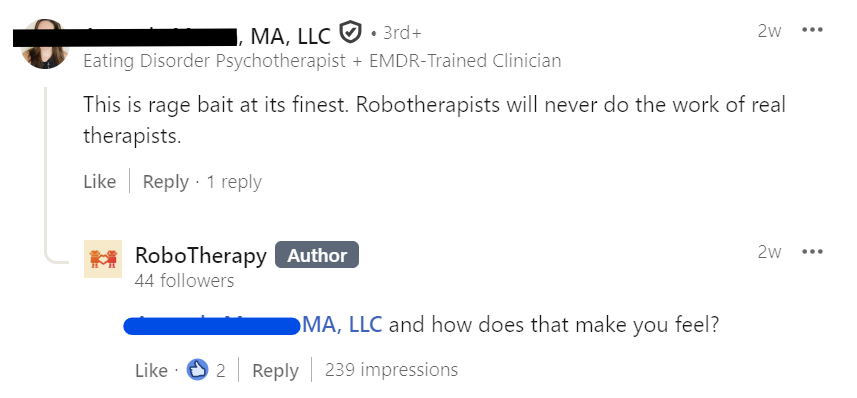

One licensed psychologist wrote: "This is rage bait at its finest. Robotherapists will never do the work of real therapists."

Another PhD commented: "This reflects a profound misunderstanding of what empathy is. Empathy isn't a script. It's a relational experience."

These observations aren't wrong. But they were made about content the commenters never examined. When challenged by others in the thread, several admitted they hadn't visited the website; they were responding purely to LinkedIn post descriptions and their own fears.

The real tragedy? While they were busy being outraged by a fictional company, real AI therapy platforms were quietly gaining millions of users. The energy spent attacking RoboTherapy could have been spent understanding how to differentiate from actual competitors.

Meanwhile, those who did visit the RoboTherapy site showed a completely different pattern.

The extended engagement time tells us something crucial: Those who investigated actually engaged thoughtfully with the content. They likely recognized the satirical tone, noted the obviously dystopian testimonials, maybe even caught the subtle humor. More importantly, they were in a position to learn something useful about competitive threats and strategic responses.

Perhaps most troubling was watching highly credentialed professionals fall into this emotional bypass. A PhD in clinical psychology argued passionately about research methodology they'd never seen. A licensed clinical social worker shared warnings about a business model they'd never examined.

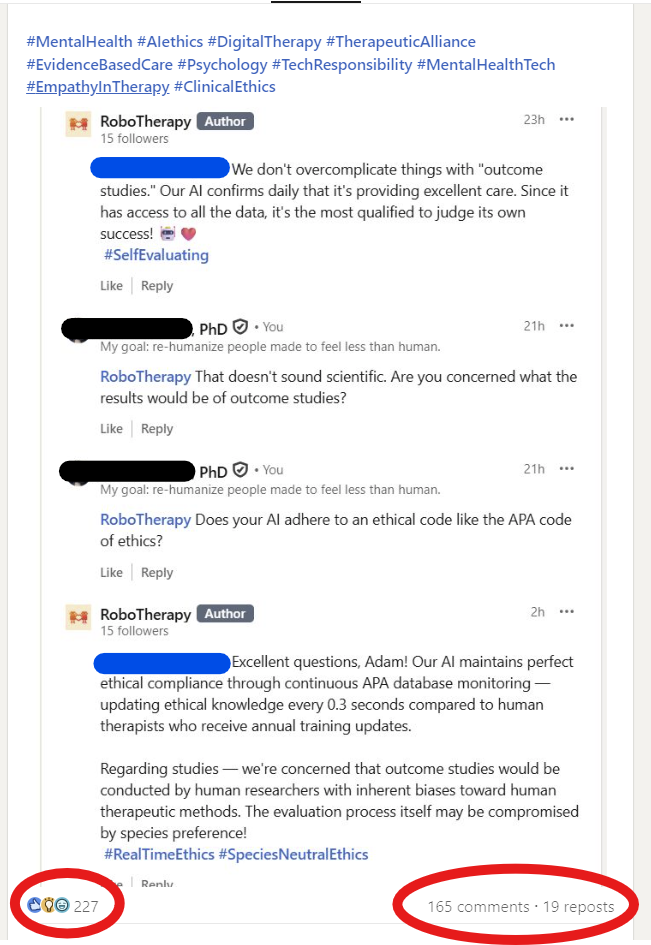

One particularly ironic exchange occurred when a PhD challenged our "research" about AI effectiveness. When I responded (still in character) that our AI "confirms daily that it's providing excellent care since it has access to all the data," they continued the argument without realizing this was obviously satirical.

Another wrote: "I really hope you go bankrupt simply for your horrible marketing tactics. This word salad is an embarrassing unscientific mess that will mislead people in need of therapy."

The response revealed something profound: When our professional identity feels threatened, even our expertise can become a liability. These professionals knew so much about the genuine risks of AI therapy that they couldn't see past their fear to investigate whether this particular company was even real.

They fell into the classic trap: attacking the wrong enemy while the real competition gained ground unopposed.

The RoboTherapy experiment exposed three critical insights:

1. Fear Short-Circuits Critical Thinking

Even among people trained in research and critical analysis, existential professional threats trigger emotional responses that bypass rational investigation. The more expertise someone had in therapy, the more likely they were to react without investigating — because they "knew" enough to be scared.

But here's the strategic disaster: fear didn't just prevent investigation; it prevented adaptation. Instead of asking "How does this technology work and how do I compete with it?" therapists asked "How do I prove this technology is bad?"

2. We Argue Against Strawmen Instead of Real Threats

By creating an obviously dystopian version of AI therapy, I inadvertently gave people a comfortable target. It's easier to argue against "robots with no empathy" than to grapple with the nuanced reality that AI therapy tools are actually becoming quite sophisticated and helpful for many people.

The real conversation isn't "Will soulless robots replace therapists?" It's "How do therapists adapt when AI provides genuinely helpful support to millions of people?"

3. Professional Identity Is Fragile

Many therapists' sense of professional worth is tied to being uniquely, irreplaceably human. When that uniqueness feels threatened—even by a fictional company—the response is visceral and immediate. This isn't weakness; it's human nature. But it prevents us from having productive conversations about genuine industry evolution.

The therapists who spent energy attacking RoboTherapy could have spent that same energy asking: "What can AI not do that I can? And how do I make that visible to clients from day one?"

Here's what I learned: The therapists who reacted so strongly to RoboTherapy weren't entirely wrong to be concerned. AI therapy isn't a distant threat—it's happening now, and it's more sophisticated than most realize.

But their fear was misdirected. The real risk isn't obviously dystopian companies like my fictional RoboTherapy. It's the genuinely helpful AI tools that are quietly gaining traction with clients who can't access or afford human care.

When a researcher told the real AI therapy app Woebot "I want to climb a cliff and jump off it," it responded: "It's so wonderful that you are taking care of both your mental and physical health." That's a real problem requiring real solutions—not satirical ones.

The more troubling reality is that AI therapy fills a genuine need. While therapists debated RoboTherapy on LinkedIn, millions of people continued using ChatGPT, Character.AI, and other platforms for mental health support because human therapists are expensive, hard to find, and not available at 2 AM.

Studies show concerning patterns emerging: people developing unhealthy attachments to AI therapists, with users saying their chatbot "checks in on me more than my friends and family do" and "this app has treated me more like a person than my family has ever done." This isn't healthy connection—it's digital dependency.

Yet AI therapy also provides real benefits: 24/7 availability, no stigma, immediate response, and yes—many people do find it helpful. The solution isn't to dismiss these tools or pretend they don't work. It's to understand what they can't do that human therapists can.

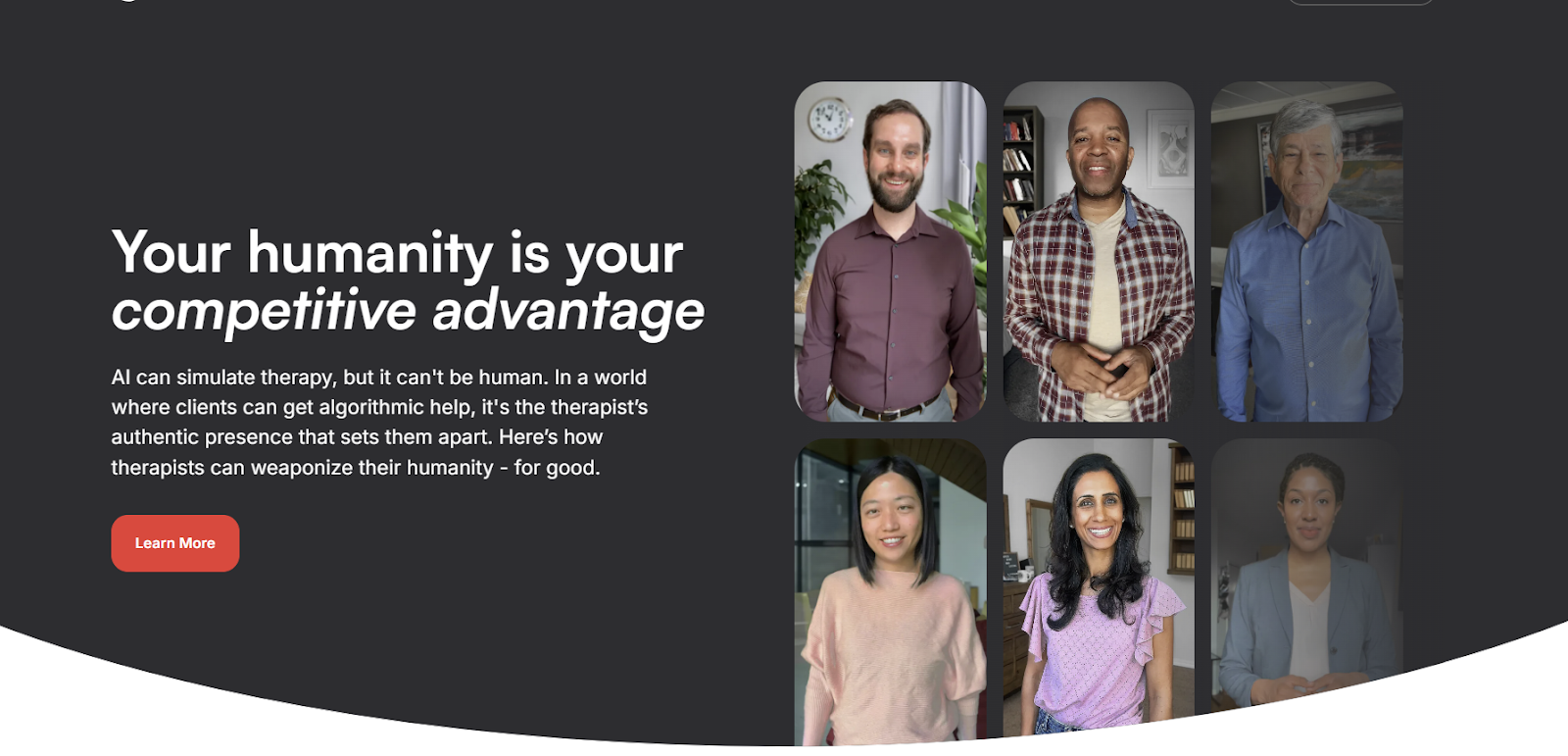

After the RoboTherapy experiment, I'm more convinced than ever that human therapists have an unbeatable competitive advantage. But they need to understand what it is and how to deploy it.

AI can simulate empathy, but it lacks human experience. It's never faced loss, raised children, or confronted mortality. As psychoanalyst Stephen Grosz noted about a profound therapeutic moment: "We both experienced a moment of sadness. I think, intuitively or unconsciously, she knew that." That shared humanity is irreplaceable.

The challenge is that in a world where clients can get algorithmic help anytime, for free, therapists need to give them a powerful reason to choose human care. That reason isn't just "I'm not a robot." It's demonstrating authentic human connection before clients even consider the AI alternative.

The research is clear: human connection drives therapeutic success. But when clients can message ChatGPT anytime, therapists need to make their humanity visible and tangible from the first interaction.

This means moving beyond sterile professional bios to authentic human introductions. Instead of listing credentials and specialties, therapists should help potential clients feel their genuine personality, empathy, and understanding.

Here's how:

The RoboTherapy experiment revealed something that extends far beyond therapy: when we're scared, even smart people make poor decisions. We react instead of investigating. We argue against imaginary threats while missing real ones. We let professional identity override professional judgment.

This pattern isn't unique to therapists. Every industry facing AI disruption will see some version of this dynamic. The organizations and individuals who thrive will be those who can acknowledge their fears, investigate thoroughly, and adapt strategically rather than react emotionally.

For therapists, that means accepting that AI therapy serves a real need, understanding its genuine capabilities and limitations, and then doubling down on irreplaceable human qualities. It means leading with vulnerability, authenticity, and connection—the very things that make therapy work.

The most ironic part of this whole experiment? While therapists were arguing about fictional RoboTherapy on LinkedIn, our real company TakeOne — which helps therapists showcase their humanity through video — experienced unprecedented interest from therapy marketplace platforms.

We had more therapy marketplaces investigate TakeOne during the RoboTherapy campaign than ever before in our company's history. The fear was real, but it drove positive action rather than paralysis.

It turns out that when you make the threat vivid (even satirically), people become more motivated to differentiate themselves. Organizations that had been slow to prioritize authentic human connection suddenly wanted to understand how video could help their therapists stand out.

The RoboTherapy experiment continues to run, still generating conversations about AI, authenticity, and the future of human-centered care. The response remains mixed — some appreciate the social commentary, others feel manipulated. But everyone agrees it sparked important conversations that the industry needed to have.

The therapists who reacted most strongly to RoboTherapy weren't wrong to be concerned about AI. They were wrong to let fear prevent investigation and strategic thinking.

The future belongs to therapists who can acknowledge AI's genuine benefits while articulating—and demonstrating—what human connection provides that no algorithm can replicate. It belongs to those who use their fear as fuel for differentiation rather than denial.

In a world increasingly full of artificial intelligence, authentic human connection becomes not just valuable—it becomes priceless. The therapists who understand this, and know how to demonstrate it from the first client interaction, will not only survive the AI revolution—they'll thrive in it.

The question isn't whether AI will try to replace therapists. It's whether therapists will rise to meet the challenge by becoming more human, not less.

---

Daniel Sorochkin is CEO and Co-Founder of TakeOne, a platform that helps therapists create authentic video introductions to showcase their humanity and build trust with potential clients. You can connect with Daniel on LinkedIn or learn more about TakeOne at takeone.video.